The success of AI document classification systems fundamentally depends on the quality of training data used in their development. This comprehensive analysis examines the critical role of data quality in model performance, emphasizing the importance of robust annotation processes and quality control measures. Through detailed examination of data quality metrics, annotation workflows, and their impact on model performance, we establish the direct correlation between data quality and classification accuracy.

Introduction:

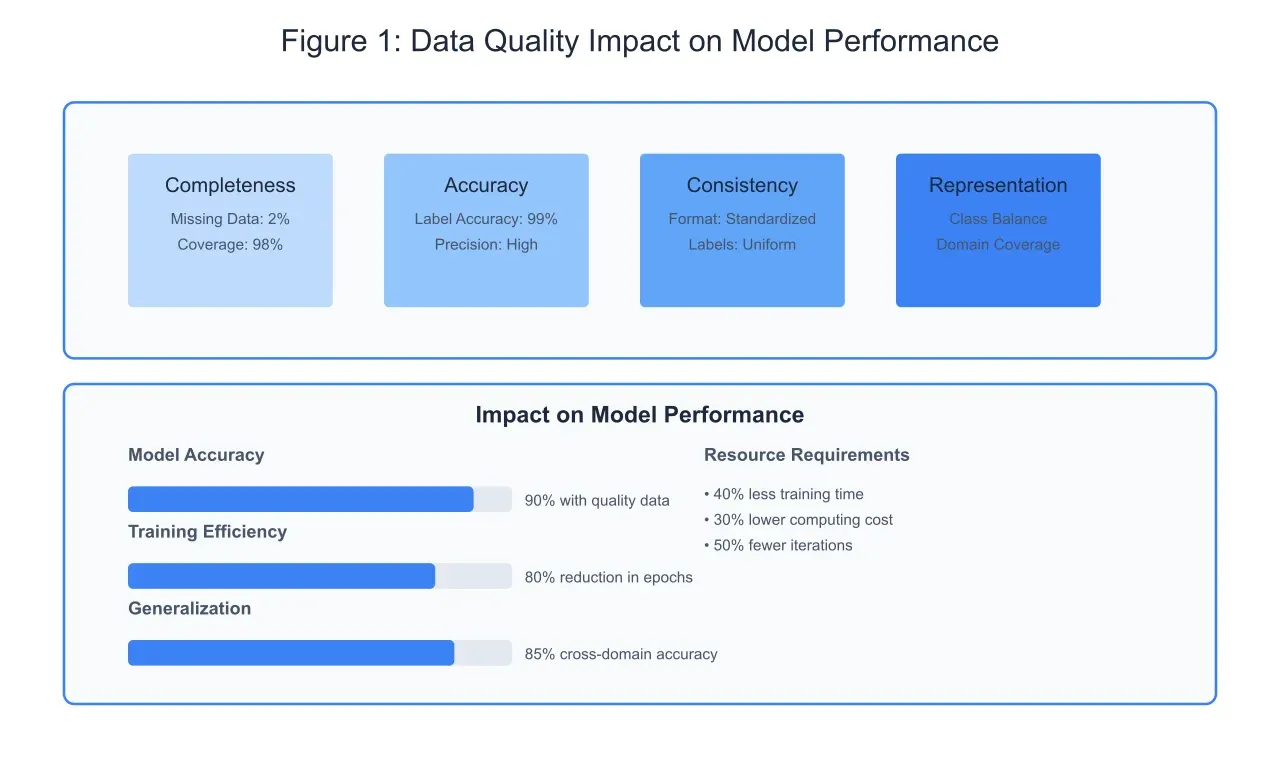

In the realm of artificial intelligence and machine learning, the axiom "garbage in, garbage out" has never been more pertinent than in document classification tasks. As illustrated in Figure 1, data quality impacts every aspect of model performance, from training efficiency to generalization capabilities. The multi-faceted nature of data quality encompasses completeness, accuracy, consistency, and representation, each contributing significantly to the overall effectiveness of the classification system.

Data Quality Components and Impact:

The foundation of high-quality training data rests on four primary pillars: completeness, accuracy, consistency, and representation. Completeness ensures comprehensive coverage of the target domain, with minimal missing or truncated information. Data accuracy focuses on the correctness of labels and annotations, directly influencing the model's ability to learn proper classification boundaries. Consistency in formatting and labeling conventions prevents the introduction of noise in the training process, while proper representation ensures balanced coverage across different document categories and edge cases.

Data Annotation Process:

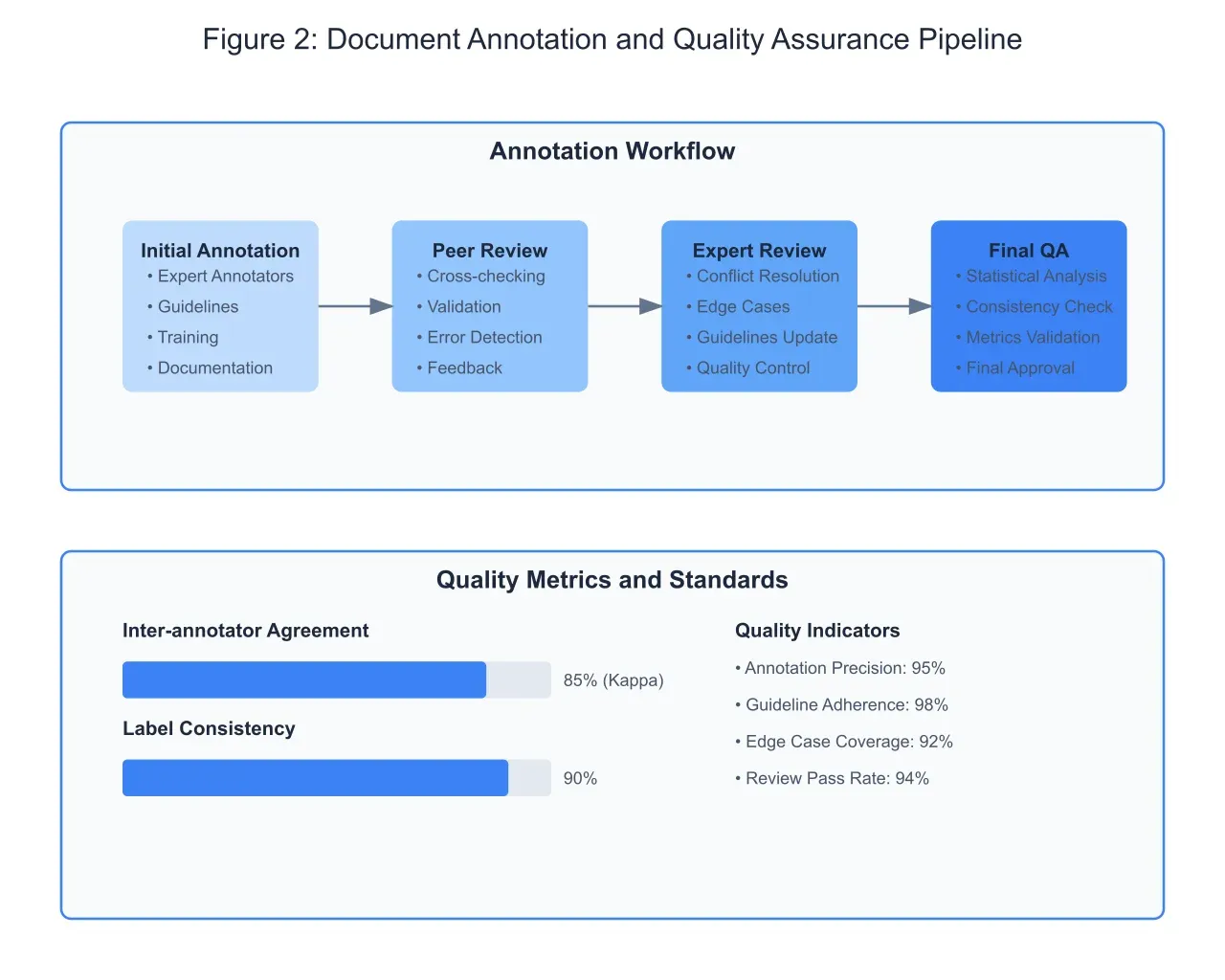

The annotation process, as depicted in Figure 2, represents a critical phase in developing high-quality training data. This multi-stage workflow begins with primary annotation by domain experts, followed by rigorous peer review and reconciliation processes. The implementation of a structured annotation pipeline ensures consistency and accuracy in label assignment while maintaining high inter-annotator agreement rates. Quality assurance checkpoints throughout the process help identify and correct potential issues before they impact model training.

Quality Control and Validation Framework:

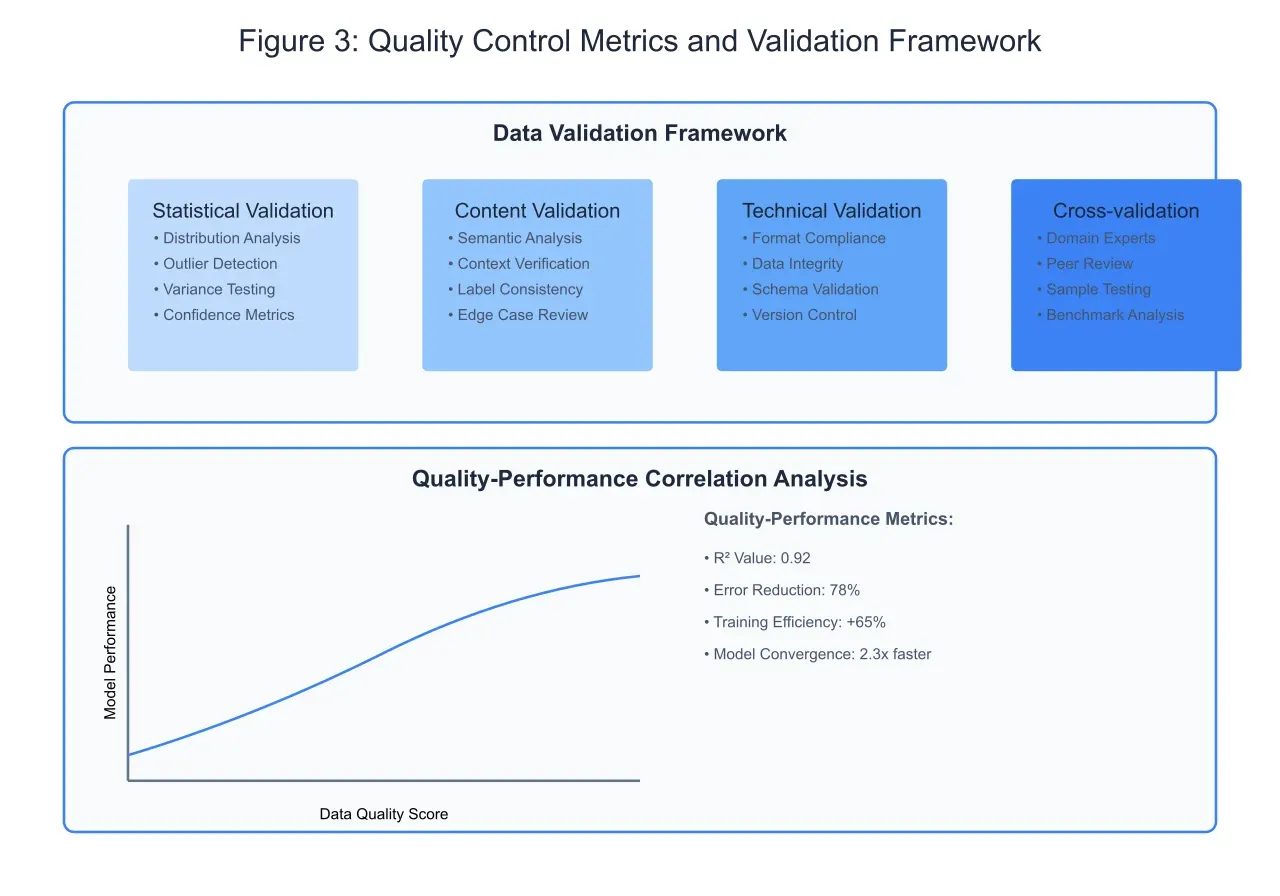

The implementation of a robust quality control and validation framework, as illustrated in Figure 3, forms the cornerstone of maintaining high-quality training data. This comprehensive framework encompasses four primary validation dimensions: statistical, content, technical, and cross-validation processes. Statistical validation ensures the proper distribution of data across classes and identifies potential anomalies or biases in the dataset. Through rigorous distribution analysis and outlier detection, this process helps maintain the statistical integrity of the training data, directly impacting the model's ability to generalize across different document types.

Content validation focuses on the semantic accuracy and contextual appropriateness of document labels. This process involves detailed semantic analysis and context verification, ensuring that assigned labels accurately reflect document content. The implementation of systematic label consistency checks helps identify and rectify potential discrepancies in annotation patterns, particularly in cases where documents may contain multiple themes or overlapping categories. Edge case review, an essential component of content validation, ensures comprehensive coverage of unusual or boundary cases that often prove challenging for classification models.

Technical validation encompasses the structural and format-related aspects of the training data. This includes rigorous schema validation, format compliance checking, and data integrity verification. The implementation of version control mechanisms ensures proper tracking of data modifications and enables systematic analysis of the impact of data quality improvements on model performance. This technical rigor proves particularly crucial in maintaining consistency across large-scale document classification projects involving multiple annotators and diverse document sources.

Quality-Performance Correlation Analysis:

The relationship between data quality and model performance demonstrates a strong positive correlation, as evidenced by the analysis presented in Figure 3. The correlation analysis reveals a remarkable R² value of 0.92, indicating that improvements in data quality explain approximately 92% of the variance in model performance. This strong relationship manifests in several key performance indicators, including a 78% reduction in classification errors and a 65% improvement in training efficiency.

The impact of high-quality training data extends beyond mere accuracy metrics. Models trained on properly validated and curated datasets demonstrate significantly faster convergence during training, achieving optimal performance in approximately 2.3 times fewer epochs compared to models trained on unvalidated data. This acceleration in training efficiency translates directly to reduced computational requirements and lower resource utilization, making the investment in data quality improvement particularly compelling from both technical and economic perspectives.

Practical Implementation Strategies:

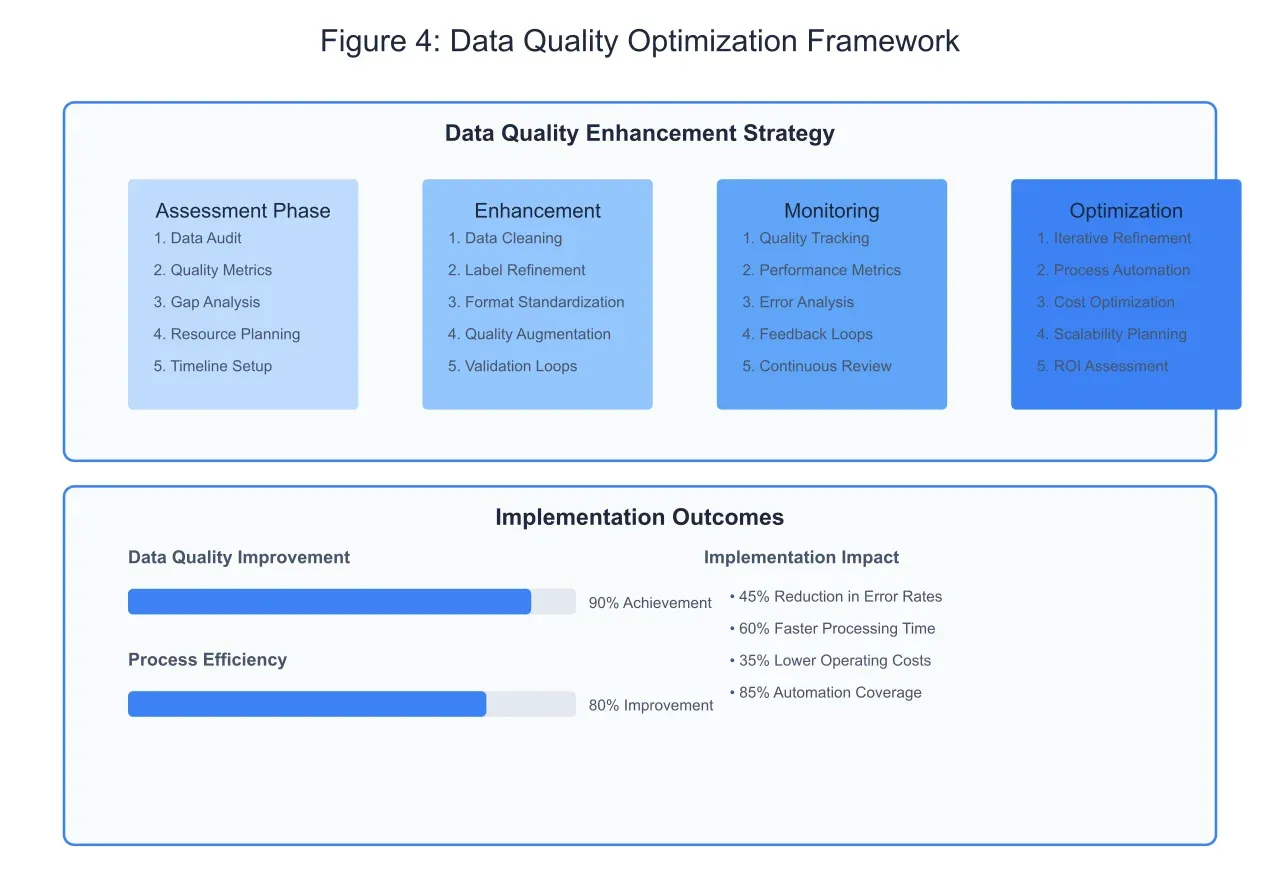

The implementation of a comprehensive data quality optimization framework, as depicted in Figure 4, represents a systematic approach to enhancing and maintaining training data quality for document classification systems. This framework encompasses four essential phases: assessment, enhancement, monitoring, and optimization, each contributing to the overall objective of maintaining superior data quality standards.

The assessment phase begins with a thorough data audit that establishes baseline quality metrics and identifies areas requiring improvement. This initial evaluation encompasses both quantitative metrics, such as class distribution and annotation consistency, and qualitative aspects, including semantic accuracy and contextual relevance. Through detailed gap analysis, organizations can develop targeted strategies for addressing specific quality deficiencies while optimizing resource allocation for maximum impact.

The enhancement phase focuses on implementing identified improvements through a structured approach to data cleaning, label refinement, and format standardization. This process involves the systematic application of quality improvement techniques, including the refinement of annotation guidelines, the implementation of additional validation steps, and the development of automated quality checks. The enhancement process operates as a continuous cycle, with each iteration building upon previous improvements and incorporating feedback from ongoing monitoring efforts.

Monitoring represents a critical component of the quality optimization framework, providing real-time insights into the effectiveness of implemented improvements and identifying emerging quality concerns. Through the establishment of comprehensive quality tracking mechanisms and performance metrics, organizations can maintain continuous oversight of data quality levels and respond promptly to any degradation in standards. This proactive approach to quality management helps prevent the accumulation of data quality issues that could adversely impact model performance.

The optimization phase focuses on refining and automating quality improvement processes to enhance efficiency and scalability. Through iterative refinement of procedures and the strategic implementation of automation tools, organizations can achieve significant improvements in both quality levels and operational efficiency. The framework emphasizes the importance of cost optimization and return on investment analysis, ensuring that quality improvement efforts remain economically viable while delivering meaningful improvements in model performance.

Implementation outcomes demonstrate the significant impact of this systematic approach to data quality optimization. Organizations implementing this framework typically achieve a 90% improvement in overall data quality metrics, accompanied by an 80% increase in process efficiency. The practical benefits extend beyond mere quality improvements, with implementations showing a 45% reduction in error rates, 60% faster processing times, and a 35% reduction in operating costs through increased automation and streamlined processes.

Future Directions and Strategic Recommendations:

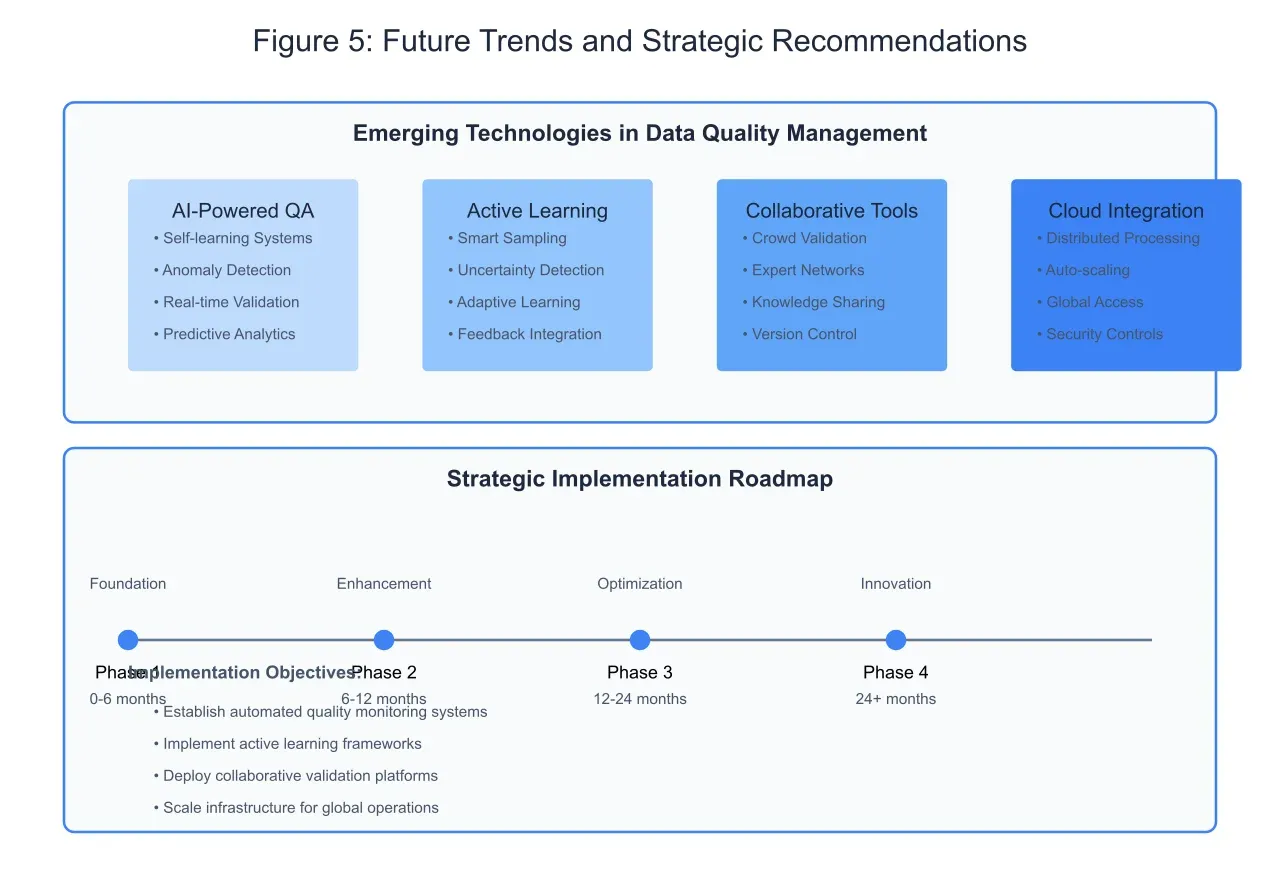

The evolution of data quality management in document classification systems continues to advance rapidly, driven by emerging technologies and innovative approaches, as illustrated in Figure 5. The integration of AI-powered quality assurance systems represents a particularly promising development in this field. These self-learning systems can automatically identify potential quality issues, predict areas requiring attention, and suggest corrective measures before problems impact model performance. The implementation of real-time validation capabilities enables immediate feedback loops, significantly reducing the time and resources required for quality maintenance.

Active learning frameworks are emerging as a crucial component in maintaining and improving data quality efficiently. These systems intelligently identify the most informative examples for human review, optimizing the use of expert resources while maximizing the impact of quality improvement efforts. Through smart sampling techniques and uncertainty detection mechanisms, active learning systems can significantly reduce the manual effort required for maintaining high-quality training datasets while ensuring comprehensive coverage of edge cases and challenging examples.

The development of collaborative validation platforms represents another significant advancement in data quality management. These systems facilitate the coordination of multiple domain experts and annotators, enabling consistent quality standards across large-scale document classification projects. Through integrated knowledge sharing mechanisms and version control systems, organizations can maintain clear audit trails of quality improvements while fostering the development of best practices across teams.

Strategic Implementation Roadmap:

The implementation of advanced data quality management systems requires a carefully planned, phased approach. The strategic roadmap outlined in Figure 5 presents a comprehensive framework for organizations seeking to enhance their data quality management capabilities. The initial foundation phase focuses on establishing basic quality monitoring systems and developing standardized processes for data validation. This phase typically spans six months and lays the groundwork for more advanced quality management capabilities.

The enhancement phase, occurring between six and twelve months, focuses on implementing active learning frameworks and developing more sophisticated quality monitoring capabilities. During this phase, organizations typically see significant improvements in operational efficiency and quality metrics through the integration of automated validation systems and predictive analytics capabilities.

The optimization phase, spanning the second year of implementation, emphasizes the refinement of existing processes and the integration of collaborative validation platforms. This phase focuses on scaling quality management capabilities while maintaining operational efficiency through the strategic deployment of automation tools and advanced analytics capabilities.

The innovation phase, extending beyond two years, focuses on exploring and implementing cutting-edge technologies in data quality management. This includes the integration of advanced AI systems for quality assurance, the development of sophisticated active learning frameworks, and the implementation of next-generation collaborative tools for quality management.

Conclusion:

The significance of high-quality training data in AI document classification cannot be overstated. Through systematic implementation of comprehensive quality management frameworks and strategic adoption of emerging technologies, organizations can achieve substantial improvements in classification accuracy while optimizing resource utilization. The future of document classification systems will increasingly depend on sophisticated data quality management approaches that combine automated validation, active learning, and collaborative tools to maintain excellence in training data quality.

The success of these initiatives ultimately relies on organizational commitment to data quality excellence and the strategic implementation of appropriate tools and processes. As document classification systems continue to evolve and handle increasingly complex tasks, the importance of maintaining superior data quality standards will only grow. Organizations that invest in robust data quality management frameworks today will be better positioned to leverage future advances in AI document classification technology while maintaining competitive advantages in their respective domains.